An often-overlooked phase of operationalizing a data science project is setting up continuous integration (CI) for the model API. In this example I describe how we set up a CI pipeline using Docker and Jenkins for a basic Flask API. You can find the example code on our GitHub.

Why Continuous Integration?

Continuous integration allows rapid testing and deployment of code changes through automation. When a change is committed to the codebase, the CI server automatically builds the code in a fresh environment and runs a suite of automated tests, deploying the code only if the tests pass. This accelerates the operationalization part of our data science workflow and reduces the risk of shipping a change that skipped a test.

Our Continuous Integration Setup

- Docker: For building code and running tests in uniform containers

- Jenkins: For managing build and test pipelines

- Python 3

- Flask: For our API

- Pytest: For running automated tests

- GitHub: For storing project code

There are other continuous integration solutions besides Jenkins; CircleCI and Travis CI are both fairly popular. We chose Jenkins because it gives us more control over exactly how build and test environments are set up, lets us automate the creation of pipelines through Jenkinsfiles, and integrates with several other popular software lifecycle management tools (like Jira).

Steps in our Pipeline

Our pipeline will run through the following steps to build and test our code:

- Jenkins grabs a fresh copy of the code from GitHub

- Jenkins tells Docker to build a new environment from a Dockerfile

- Docker loads the Python dependencies for the project

- Docker runs the automated tests with pytest

- Jenkins reports and logs the test results

- Docker tears down the container used for the build and tests
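The control flow of these steps can be sketched in a few lines of Python. This is only an illustration of the ordering (run the stages in sequence, stop at the first failure, always tear down), not how Jenkins is implemented; `run_pipeline` and the stage names are hypothetical.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], teardown: Callable[[], None]) -> List[str]:
    """Run stages in order, stop at the first failure, and always tear down."""
    log: List[str] = []
    try:
        for name, action in stages:
            ok = action()
            log.append(f"{name}: {'PASS' if ok else 'FAIL'}")
            if not ok:
                break  # later stages are skipped once one stage fails
    finally:
        # Teardown runs whether the stages passed, failed, or raised.
        teardown()
        log.append("teardown: DONE")
    return log


if __name__ == "__main__":
    # Hypothetical stages standing in for checkout, image build, and tests.
    demo = [
        ("checkout", lambda: True),
        ("build image", lambda: True),
        ("run tests", lambda: True),
    ]
    for line in run_pipeline(demo, teardown=lambda: None):
        print(line)
```

Putting the teardown in a `finally` block mirrors the last step in the list: the container is removed even when a test fails.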

- First download docker and have the docker daemon running in your environment

-

Download jenkins container

docker pull jenkinsci/blueocean -

Create a bridge network for jenkins

docker network create jenkins -

Create Volumes for jenkins certificates

docker volume create jenkins-docker-certs docker volume create jenkins-data -

Run the docker instance that jenkins will use as a build server

docker container run --name jenkins-docker --rm --detach \ --privileged --network jenkins --network-alias docker \ --env DOCKER_TLS_CERTDIR=/certs \ --volume jenkins-docker-certs:/certs/client \ --volume jenkins-data:/var/jenkins_home \ --publish 2376:2376 docker:dind -

Run the docker instance of blue ocean (web ui for jenkins)

docker container run --name jenkins-blueocean --rm --detach \ --network jenkins --env DOCKER_HOST=tcp://docker:2376 \ --env DOCKER_CERT_PATH=/certs/client --env DOCKER_TLS_VERIFY=1 \ --volume jenkins-data:/var/jenkins_home \ --volume jenkins-docker-certs:/certs/client:ro \ --publish 8080:8080 --publish 50000:50000 jenkinsci/blueocean - Open localhost:8080/blue to set up our pipeline

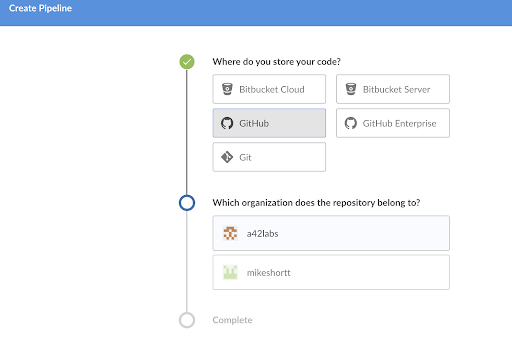

- Click "create a new pipeline"

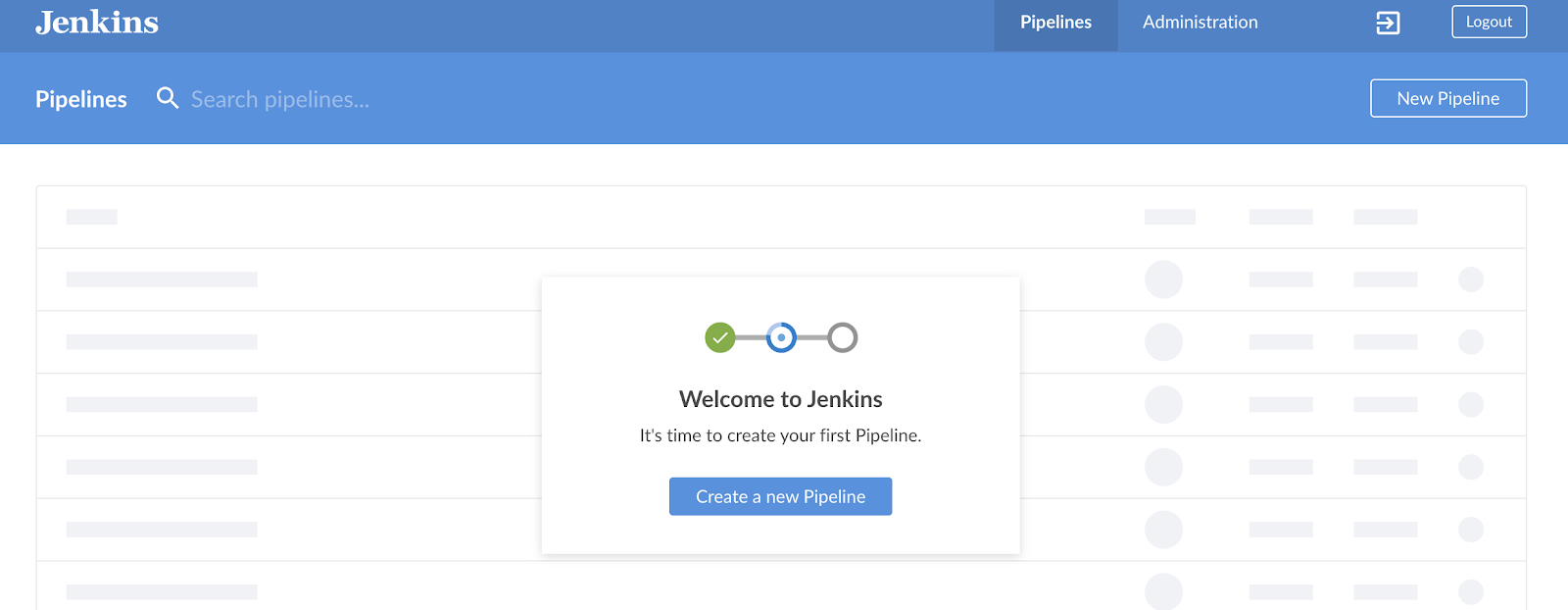

- Select GitHub as your repo

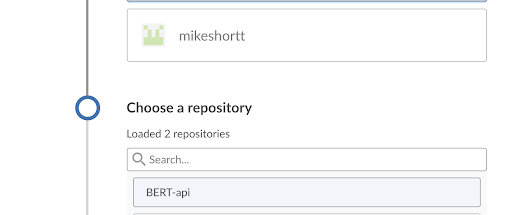

- Select the repository owner (in this case a42labs)

- Select our repository

- Click "create pipeline" and you're done! Jenkins reads the setup of the pipeline from the Jenkinsfile and automatically starts running it.

Our solution runs a docker container with a jenkins continuous integration server for running builds and tests of our code. There is a separate docker container running the jenkins plugin for Blue Ocean, which is a web-friendly UI that lets us set up continuous integration pipelines. Finally, when building our automated tests, it uses a Dockerfile to automatically build and teardown a docker environment for us to test in.

Setting up Jenkins
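Jenkins can take a little while to start after its container comes up, so it can help to confirm that the UI is reachable before opening it in a browser. The sketch below polls a URL using only the Python standard library. The function names and the choice to treat 5xx responses as "not ready yet" are assumptions for illustration, not part of our setup.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def is_up(url: str, opener: Callable = urllib.request.urlopen) -> bool:
    """Return True if the server at `url` answers with a non-5xx response."""
    try:
        with opener(url, timeout=5):
            return True
    except urllib.error.HTTPError as err:
        # A 4xx (for example, a login redirect that ends in 403) still means
        # the server is running; a 5xx is assumed to mean it is still starting.
        return err.code < 500
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return False


def wait_until_up(
    url: str,
    attempts: int = 30,
    delay: float = 2.0,
    check: Callable[[str], bool] = is_up,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll `url` up to `attempts` times, sleeping `delay` seconds between tries."""
    for _ in range(attempts):
        if check(url):
            return True
        sleep(delay)
    return False


# Example usage (needs the containers from the steps above to be running):
#   wait_until_up("http://localhost:8080/blue")
```

The `opener`, `check`, and `sleep` parameters exist only so the helpers can be exercised without a live server.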

Setting up our Pipeline (with a Jenkinsfile)

There are several ways to build a pipeline in Jenkins, but using a Jenkinsfile allows us to automate the process of creating build and test pipelines by reading them from the Jenkinsfile file in the root of our GitHub repo. Here's the Jenkinsfile we're using for our api below. We'll go through each section of the file below.

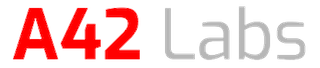

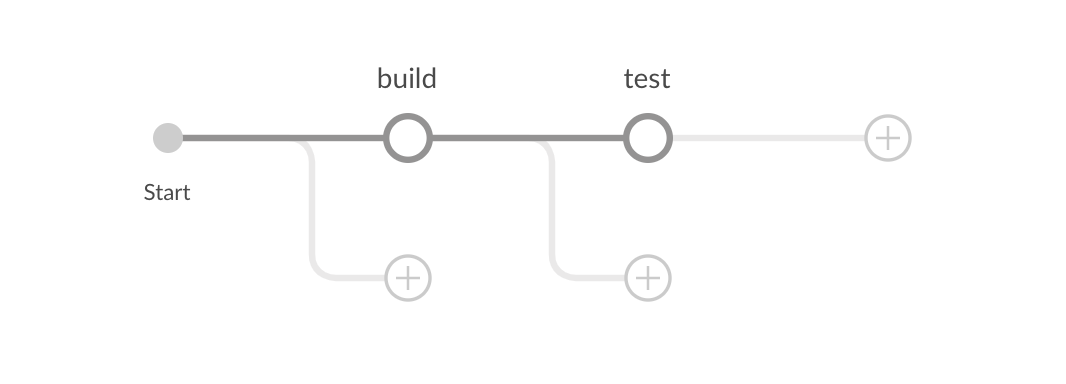

Here's Jenkins's diagram of the pipeline from our Jenkinsfile

pipeline {

agent {

dockerfile {

filename 'Dockerfile'

}

}

stages {

stage('build') {

steps {

git 'https://github.com/a42labs/ci-flask-api.git'

// sh 'pip install --user -r requirements.txt'

}

}

stage('test') {

steps {

sh 'echo "test"'

sh 'python -V'

sh 'python -m pytest'

}

}

}

}

Below I go through the various components of the Jenkinsfile above and what they're doing:

Pipeline/Agent

    pipeline {
        agent {
            dockerfile {
                filename 'Dockerfile'
            }
        }

This tells our Jenkins pipeline to use the Dockerfile in the working directory to build the temporary Docker container we'll use for testing.
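As a rough mental model, the `dockerfile` agent builds an image from the Dockerfile and then runs each pipeline step inside a container created from that image. The sketch below assembles approximately equivalent `docker` commands in Python. It is a simplification: Jenkins' actual invocation differs in detail, and the image tag, workspace path, and helper names here are hypothetical.

```python
import shlex
from typing import List


def build_command(tag: str, dockerfile: str = "Dockerfile", context: str = ".") -> List[str]:
    """Command that builds an image from the given Dockerfile."""
    return ["docker", "build", "--tag", tag, "--file", dockerfile, context]


def run_command(tag: str, workspace: str, step: str) -> List[str]:
    """Command that runs one shell step in a throwaway container.

    The checked-out code is mounted at /workspace (an assumed path), and
    --rm removes the container as soon as the step finishes.
    """
    return [
        "docker", "run", "--rm",
        "--volume", f"{workspace}:/workspace",
        "--workdir", "/workspace",
        tag, "sh", "-c", step,
    ]


if __name__ == "__main__":
    tag = "ci-flask-api:test"  # hypothetical image tag
    print(shlex.join(build_command(tag)))
    print(shlex.join(run_command(tag, "/tmp/ci-flask-api", "python -m pytest")))
```

Passing these lists to `subprocess.run(..., check=True)` would execute them; the sketch only prints them so it can be read without Docker installed.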

Build Step

        stages {
            stage('build') {
                steps {
                    git 'https://github.com/a42labs/ci-flask-api.git'
                    // sh 'pip install --user -r requirements.txt'
                }
            }

This tells the Jenkins worker to check out the most recent version of our code from our GitHub repo so that it is available inside the Docker container. The `pip install` line is commented out, presumably because the Python dependencies are already installed when Docker builds the environment.

Test Step

            stage('test') {
                steps {
                    sh 'echo "test"'
                    sh 'python -V'
                    sh 'python -m pytest'
                }
            }
        }
    }

This writes the word "test" to stdout, followed by the current Python version. It then runs pytest, which runs all of the tests in the ./test directory and logs whether each one passes or fails.
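For reference, pytest collects files named `test_*.py` and runs the functions in them whose names start with `test`. A minimal sketch of such a file is shown below. The `predict` function is a hypothetical stand-in for the model logic behind the API, defined inline so the example is self-contained; it is not the code from our repo.

```python
# test/test_predict.py (hypothetical file name)


def predict(features):
    """Hypothetical stand-in for a model: returns the mean of the features."""
    if not features:
        raise ValueError("features must not be empty")
    return sum(features) / len(features)


def test_predict_returns_mean():
    # pytest reports this test as passed if the assertion holds.
    assert predict([1.0, 2.0, 3.0]) == 2.0


def test_predict_rejects_empty_input():
    # With pytest installed, `pytest.raises(ValueError)` is the usual idiom;
    # plain try/except keeps this sketch free of imports.
    try:
        predict([])
    except ValueError:
        return
    raise AssertionError("expected ValueError for empty input")
```

If any assertion fails, `python -m pytest` exits with a non-zero status, which is what causes the Jenkins `test` stage to be marked as failed.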

Conclusion

There are several ways to run a CI pipeline for your API. Here we walked through how to set one up with Jenkins, a tool commonly used by software engineering teams outside of data science.

If you'd like help building these kinds of solutions for your next data science project, reach out to the A42 Labs team at info@a42labs.io.